Introduction

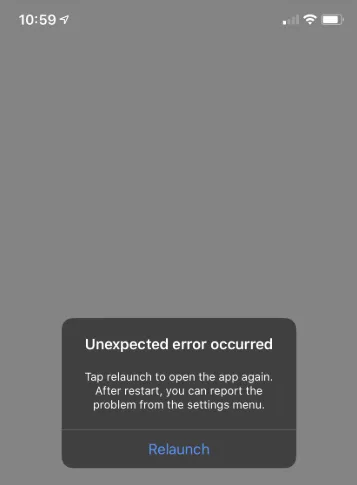

Imagine it’s 10:59 AM and you’re booking a movie ticket on your phone. You tap “Confirm”, only to be met with an error message:

“Unexpected error occurred.

Tap relaunch to open the app again.

After restart, you can report the problem from the settings menu.”

This brief moment of frustration raises several questions:

- Why did this happen?

- Did my booking go through?

- Do I have to start all over again?

Behind the scenes, a dedicated team of Site Reliability Engineers (SREs) is already working to ensure that such interruptions remain minimal. This post explores how SRE transforms reactive troubleshooting into proactive, automated resilience.

1. What Is SRE and Why Does It Matter?

Site Reliability Engineering (SRE) integrates software development practices with IT operations. The goal is to build systems that are reliable, scalable, and self-healing. In essence, SRE bridges the gap between writing code and running it in production, aiming to catch and resolve errors before they impact users.

For further insight into SRE principles, refer to Google’s SRE Book.

2. Traditional IT vs. SRE: From Firefighting to Smart Infrastructure

| Approach | Analogy | Methodology |
|---|---|---|
| Traditional IT | Waiting for a server to crash is like calling firefighters after a fire. | Reactive troubleshooting and manual fixes. |
| SRE | Building a system with smoke detectors, sprinklers, and backup generators. | Proactive monitoring, automation, and self-healing mechanisms. |

SRE is about anticipating issues—integrating early warning systems and automated recovery processes so that disruptions are minimized or prevented entirely.

3. The “Unexpected Error” Nobody Wants

Unexpected errors can result from various challenges:

- Traffic Spikes: Sudden surges (like thousands of users buying concert tickets) can overload systems. SRE leverages auto-scaling to add capacity dynamically.

- Buggy Code: New features might inadvertently introduce crashes. Automated testing and canary deployments help identify and isolate these issues.

- Third-Party Failures: Dependencies, such as payment gateways (e.g., Stripe), can occasionally fail. Circuit breakers and graceful degradation strategies ensure minimal impact.

Without SRE, these issues could result in prolonged downtime. With SRE, problems are often resolved before you even hit “Relaunch.”
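To make the circuit breaker and graceful degradation ideas above more concrete, here is a minimal sketch. It is an illustration under assumptions, not a production implementation: the class name `CircuitBreaker`, the helper `charge_with_fallback`, and the thresholds are all hypothetical, and the payment call is passed in as a plain function rather than a real gateway client.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is skipped."""


class CircuitBreaker:
    """Hypothetical breaker: opens after repeated failures, retries after a timeout."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("dependency marked unhealthy; failing fast")
            # Timeout elapsed: fall through and allow one trial call (half-open).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # A success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


def charge_with_fallback(breaker, charge, order):
    """Graceful degradation: mark the payment as pending instead of crashing."""
    try:
        return breaker.call(charge, order)
    except Exception:
        return {"status": "pending", "order": order}
```

The design choice worth noting is that an open breaker stops calling the failing dependency entirely, which protects both the app and the struggling third party until the timeout allows a trial request.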

4. How SRE Prevents and Resolves Failures

SRE teams emphasize proactive monitoring and automated recovery through:

Real-Time Alerts

- Tools like Prometheus and New Relic continuously collect system metrics (e.g., server load, error rates), and Grafana visualizes them on dashboards.

- Alerts that fire as soon as a threshold is breached let engineers respond early, ideally before most users are affected.
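The kind of error-rate alerting described above can be sketched as a small sliding-window check. This is only an illustration of the idea, not how Prometheus or New Relic evaluate rules internally; the class name `ErrorRateAlert` and its default thresholds are assumptions made for this example.

```python
from collections import deque


class ErrorRateAlert:
    """Hypothetical alert: fires when the recent error rate exceeds a threshold."""

    def __init__(self, window_size=100, threshold=0.05, min_requests=20):
        # Keep only the most recent outcomes; True means the request failed.
        self.outcomes = deque(maxlen=window_size)
        self.threshold = threshold
        self.min_requests = min_requests

    def record(self, failed):
        self.outcomes.append(bool(failed))

    def error_rate(self):
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def should_fire(self):
        # Require a minimum sample so a single early failure does not page anyone.
        enough_data = len(self.outcomes) >= self.min_requests
        return enough_data and self.error_rate() > self.threshold
```

In use, each request handler would call `record(...)` and a periodic job would check `should_fire()` before notifying whoever is on call.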

Self-Healing Systems

Automated scripts can:

- Restart crashed services.

- Shift traffic to healthy servers.

- Roll back recent updates if necessary.
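The three actions listed above (restart, shift traffic, roll back) can be sketched as one decision pass over a fleet. This is a simplified model under stated assumptions: `Instance`, `heal`, and the `max_restarts` policy are hypothetical names, and the function only records which action it would take rather than touching real servers.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    healthy: bool
    version: str
    restarts: int = 0


def heal(instances, stable_version, max_restarts=2):
    """Return (routable, actions) for one health-check pass.

    routable: names of instances that may receive traffic.
    actions:  (action, instance_name) tuples chosen for unhealthy instances.
    """
    actions = []
    for inst in instances:
        if inst.healthy:
            continue
        if inst.restarts < max_restarts:
            # First line of defense: restart the crashed service.
            inst.restarts += 1
            actions.append(("restart", inst.name))
        elif inst.version != stable_version:
            # Restarts did not help: roll back the recent update.
            inst.version = stable_version
            inst.restarts = 0
            actions.append(("rollback", inst.name))
        else:
            # Already on the stable version and still failing: escalate.
            actions.append(("page_human", inst.name))
    # Traffic is shifted by routing only to instances that are healthy.
    routable = [inst.name for inst in instances if inst.healthy]
    return routable, actions
```

Escalating to a person as the last step reflects that automation handles the common cases, while unfamiliar failures still need human judgment.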

Blameless Post-Mortems

After an incident, teams focus on identifying systemic improvements rather than assigning blame, ensuring that lessons learned lead to more robust systems.

For a deeper dive into incident analysis, explore Google’s Incident Analysis.

5. A Tale of Two Outages

Scenario A: Without SRE

- 10:59 AM: A server becomes overloaded during peak traffic.

- 11:05 AM: Users start reporting errors; support tickets begin to flood in.

- 11:30 AM: Engineers manually reboot servers in a frantic effort to restore service.

- 11:45 AM: Service is restored, but customer trust has been compromised.

Scenario B: With SRE

- 10:58 AM: Monitoring systems detect a surge in traffic.

- 10:58 AM: Auto-scaling instantly adds backup servers.

- 10:59 AM: You successfully book your ticket—without interruption.

- 11:00 AM: Excess capacity is gracefully scaled down as traffic normalizes.

In Scenario B, the “Unexpected error” is averted through the proactive measures of SRE.

6. The SRE Toolbox: Key Practices

Monitoring & Alerting

- Tools: Prometheus and New Relic collect critical performance metrics; Grafana displays them on dashboards.

- Objective: Detect issues such as slow database queries or memory leaks before they escalate.

Auto-Scaling

- Tools: Platforms like Kubernetes and AWS Auto Scaling dynamically manage server capacity.

- Objective: Ensure that systems can handle traffic surges without degradation in performance.
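As a rough illustration of how an auto-scaler decides on capacity, the sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name and the `min_replicas`/`max_replicas` defaults are assumptions for this example, and it deliberately ignores details such as tolerance bands and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale replica count in proportion to how far the metric is from its target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp so a metric spike cannot scale the fleet without bound.
    return max(min_replicas, min(max_replicas, raw))
```

For example, four replicas running at 90% CPU against a 60% target would scale to six, and the same fleet at 30% CPU would scale down to two.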

Error Budgets

- Concept: Recognize that 100% uptime is impractical. For example, a 99.9% uptime target permits about 43 minutes of downtime in a 30-day month.

- Objective: Strike a balance between innovation and reliability.
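The arithmetic behind the error budget figure can be checked with a few lines of code. This is a minimal sketch assuming a 30-day month; the function names are hypothetical.

```python
def error_budget_minutes(slo, days=30):
    """Minutes of allowed downtime for an availability target such as 0.999."""
    total_minutes = days * 24 * 60  # 43,200 minutes in a 30-day month
    return total_minutes * (1 - slo)


def budget_remaining(slo, downtime_minutes, days=30):
    """Budget left after the downtime already incurred in the period."""
    return error_budget_minutes(slo, days) - downtime_minutes


print(round(error_budget_minutes(0.999), 1))  # 43.2
```

A team that has already used most of its budget might slow down risky releases, while a team with budget to spare can ship more aggressively.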

Chaos Engineering

- Tools: Tools like Netflix’s Chaos Monkey deliberately terminate running instances to test system resilience.

- Objective: Proactively expose and fix vulnerabilities.
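The idea behind a chaos experiment can be sketched with a toy simulation: pick one instance at random, remove it, and check whether enough capacity survives. This does not use Chaos Monkey or any real infrastructure API; `run_chaos_experiment` and its parameters are hypothetical names for illustration.

```python
import random


def run_chaos_experiment(instances, min_healthy, rng=None):
    """Simulate terminating one random instance and report whether capacity holds."""
    if not instances:
        raise ValueError("need at least one instance to terminate")
    if rng is None:
        rng = random.Random()
    # Sort first so a seeded rng gives a reproducible choice.
    victim = rng.choice(sorted(instances))
    survivors = set(instances) - {victim}
    return {
        "terminated": victim,
        "survivors": survivors,
        "passed": len(survivors) >= min_healthy,
    }
```

A failed experiment here is the useful outcome: it points to a service that cannot tolerate losing a single instance before a real outage does.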

7. A Day Saved by SRE: User Story

- Action: You tap “Confirm” to book your movie ticket.

- Incident: A server fails due to a memory leak from a recent update.

- Response: Monitoring systems alert the SRE team, triggering an automated rollback to a stable version.

- Recovery: Traffic is seamlessly shifted to healthy servers.

- Outcome: Your payment processes without interruption, and you remain blissfully unaware of the near-miss.

This seamless experience is not a stroke of luck—it’s the result of robust SRE practices.

Conclusion

The next time you encounter an error message, remember that behind the scenes, Site Reliability Engineering is hard at work to prevent prolonged downtime. By prioritizing automation, proactive monitoring, and rapid recovery, SRE transforms potential disasters into mere blips.

Pro Tip: If you see an error, try relaunching the app. Chances are, SRE has already resolved the issue.

Coming Up Next

In our next post, Site Reliability Engineering Fundamentals: Principles and Practices for Modern IT, we’ll dive deeper into the core principles and practical strategies that empower teams to build resilient, scalable systems. Stay tuned to learn how these foundational practices drive modern IT operations.